LLMs vs. Traditional ML Algorithms - A Pragmatic Comparison

LLMs (like GPT-4) excel in natural language understanding and generation tasks, offering powerful capabilities for processing and generating human language. They are not designed for handling structured data, clustering, image analysis, or ranking structured data tasks, where other machine learning models and algorithms would be more appropriate.

Here's what LLMs CAN do:

- Sentiment Analysis: LLMs can be used to analyze customer reviews, social media posts, or other textual data to determine sentiment (positive, negative, or neutral) and help businesses understand their audience's perception of products, services, or content.

- Text Classification and Categorization: LLMs can classify text into predefined categories, such as topic detection, spam filtering, or content moderation, allowing businesses to automate content organization and management tasks.

- Machine Translation: LLMs can be employed for translating text between languages, enabling businesses to operate in multiple markets and support multilingual customers more effectively.

- Question-Answering Systems: LLMs can be used to develop intelligent question-answering systems, such as virtual assistants or chatbots, to provide customer support, technical assistance, or personalized recommendations.

- Text Summarization: LLMs can generate concise summaries of long documents, articles, or reports, saving time for professionals who need to digest large volumes of information quickly.

- Content Generation: LLMs can be leveraged to generate text content for marketing, advertising, social media, or other purposes, helping businesses create engaging and contextually relevant content with less effort.

- Knowledge Extraction and Relation Extraction: LLMs can extract information from unstructured text data, such as entities, relationships, or events, allowing businesses to gain insights and make data-driven decisions.

What's new with LLMs?

- Improved Performance: LLMs have shown significant improvements in performance across a wide range of NLP tasks, often surpassing previous state-of-the-art models and sometimes approaching human-level performance.

- Few-shot Learning: LLMs can demonstrate an ability to learn new tasks with limited or no additional training by leveraging the vast knowledge captured during pretraining through In-context learning (ICL).

- Multitask and Multilingual Capabilities: LLMs can handle multiple tasks simultaneously and support multiple languages, making them more versatile and adaptable to various use cases.

- Emergent Capabilities: LLMs can exhibit emergent abilities not explicitly present in smaller models but become apparent in larger ones, expanding the potential range of applications and use cases.

- LLM is data leakage: These models are trained on enormous datasets, including the data that may contain sensitive and private information. As these models generate outputs based on the patterns they've learned, there is a risk of disclosing personal information included in their training data, leading to a privacy breaches that might result in fines, eg. by the GDPR compliance rules.

- Abuse of Technology: LLMs are powerful tools that can generate human-like text, which could be misused by ill-intentioned individuals or entities. For instance, they could be employed to produce mass misinformation, foster propaganda, or even engineer more convincing phishing attacks.

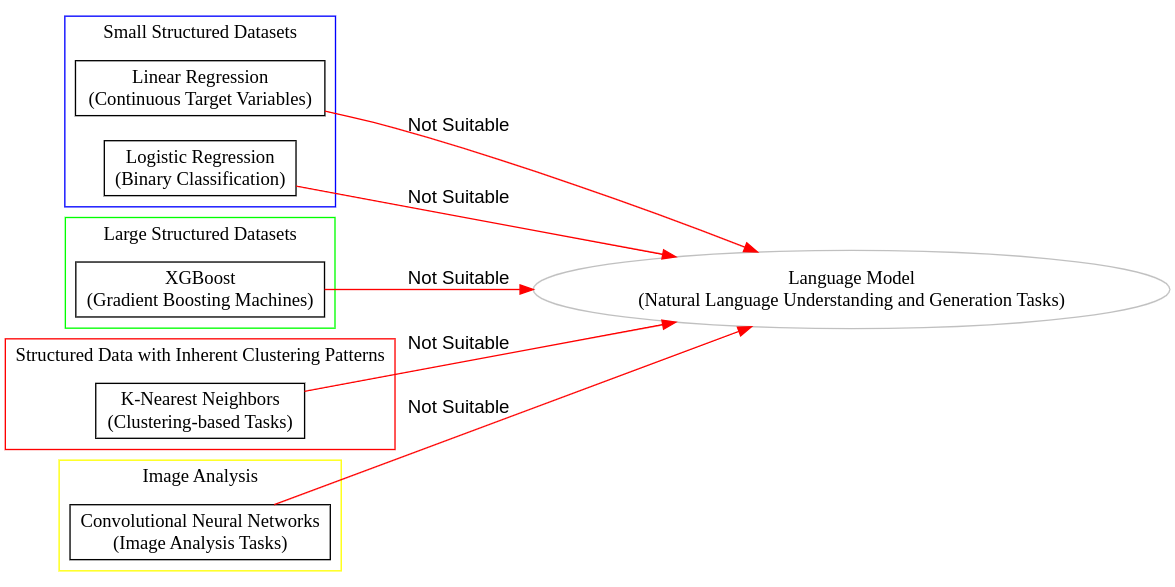

Small Structured Datasets

For small structured datasets (i.e., tabular data with a limited number of samples), linear regression (for continuous target variables) or logistic regression (for binary classification) are recommended. These models are simple, interpretable, and computationally efficient, making them suitable for situations where there is not enough data to train more complex models. Linear and logistic regression models are designed for structured data and focus on simple, interpretable relationships between input features and target variables. In contrast, LLMs are designed for natural language understanding and generation tasks, excelling in processing and generating text data rather than structured data.

Large Structured Datasets

For large structured datasets, gradient boosting machines (GBMs) like XGBoost are often the best choice. XGBoost is an optimized implementation of GBMs, which are an ensemble learning method that builds a series of decision trees sequentially, with each tree learning to correct the errors of its predecessor. This technique allows for powerful modeling of complex patterns in the data and has been known to perform well on a variety of tasks. XGBoost and other gradient boosted trees are designed to handle large structured datasets and learn complex patterns in tabular data. LLMs, on the other hand, are specialized in processing unstructured text data, enabling advanced natural language understanding and generation capabilities, which are not applicable to structured data tasks.

Structured Data with Inherent Clustering Patterns

For structured data with inherent clustering patterns (i.e., samples belonging to distinct groups), the k-nearest neighbors (KNN) algorithm can be effective. KNN is a non-parametric, instance-based learning method that classifies new instances based on the majority class of their k-nearest neighbors in the feature space. This method works well when the underlying data structure exhibits clear clusters or groups. KNN is suited for clustering-based tasks in structured data, where samples belong to distinct groups. LLMs, however, are not designed for clustering tasks but are tailored for natural language processing tasks, offering powerful capabilities for understanding and generating human language.

Image Analysis

For image analysis tasks, convolutional neural networks (CNNs) are often the go-to choice. CNNs are a type of deep learning model specifically designed to handle grid-like data, such as images. They use convolutional layers to scan local regions of the input image, capturing spatial features and hierarchies. CNNs have been highly successful in tasks like image classification, object detection, and segmentation. CNNs are specifically designed for image analysis tasks, capturing spatial features and hierarchies in grid-like data. LLMs, on the other hand, are focused on natural language understanding and generation tasks and are not suited for image analysis.

Sparring Time with Opsie!

Q: While LLMs have shown significant improvements in natural language processing tasks, isn't it a concern that their large size and complexity make them difficult to interpret and understand? How can businesses ensure that these models are reliable and make accurate predictions when the internal workings of these models are often opaque?

Opsie: LLMs indeed often have a black-box nature, which can make interpretation and understanding a challenge. To ensure reliability, businesses often employ techniques such as AI explainability, which attempts to provide insights into how models make decisions. Additionally, validation using diverse and representative datasets, coupled with regular monitoring, can ensure that the model's outputs are accurate and reliable.

Q: Given that sentiment can be highly contextual and subject to individual interpretations, how can we be certain that an LLM can accurately capture sentiment from diverse sources and across multiple contexts?

Opsie: Sentiment analysis with LLMs is a challenging task due to its subjective and context-dependent nature. Ensuring accurate sentiment analysis requires diverse and representative training data and rigorous validation processes. Fine-tuning the model with domain-specific data can also improve accuracy across different contexts.

Q: LLMs rely on pre-training on extensive datasets. How do businesses handle potential bias in these models that could affect text classification and categorization? Is there any assurance of fairness?

Opsie: Bias in pre-training datasets is indeed a concern. Mitigation strategies include using diverse and representative datasets for training, implementing regular audits for bias, and deploying robust fairness metrics. It's important to remember that ensuring fairness is an ongoing process, not a one-time task.

Q: Machine translation is a complex task that often requires understanding of cultural nuances and idiomatic expressions. How reliable can machine translation be when performed by LLMs, given these challenges?

Opsie: LLMs are improving in this domain but understanding cultural nuances and idiomatic expressions remain challenging. The reliability of machine translation can be improved by using domain-specific training data and leveraging techniques like transfer learning. Regular validation against human translations is also essential.

Q: While LLMs can generate summaries or new content, how can businesses ensure the quality of this generated content? Is there a risk that the model might generate misleading or incorrect content?

Opsie: Ensuring quality in generated content involves setting up stringent validation processes and regular monitoring. Additionally, businesses can fine-tune models with domain-specific data to improve relevance and accuracy. Human review is also often used to control the quality of the generated content.

Q: As LLMs simulate human-like conversations, there's a concern about their ethical use, especially when they're indistinguishable from humans. Could this lead to misuse or manipulation?

Opsie: The ethical use of conversational AI is a significant concern. Businesses should adhere to clear guidelines on the responsible use of AI, ensuring transparency and user consent. These systems should also be designed to clearly disclose that the user is interacting with an AI.

Q: Given that LLMs extract knowledge from pre-existing data, how can businesses ensure the relevance and timeliness of this extracted knowledge? Is there a risk that LLMs might rely on outdated or incorrect information?

Opsie: Ensuring relevance and timeliness involves regularly updating the training data and combining AI models with current data sources. Additionally, monitoring the outputs and regular validation can prevent reliance on outdated or incorrect information.

Q: When using simpler models like linear regression or more complex ones like gradient boosting machines, how can businesses ensure that these models capture the complexity and nuances of their data? Could these models potentially oversimplify or overfit the data?

Opsie: When dealing with structured datasets, the choice of model should be appropriate for the complexity and size of the data. Techniques such as cross-validation, feature importance analysis, and careful hyperparameter tuning can help avoid oversimplification or overfitting.

Q: How well do models like KNN handle cases where the boundaries between clusters are not clearly defined or when outliers are present? Isn't there a risk of misclassification?

Opsie: Models like KNN can struggle with unclear boundaries or outliers. Various techniques like anomaly detection, robust clustering algorithms, or dimensionality reduction techniques can help mitigate these issues. Regular validation using ground truth data can also help ensure accurate classification.

Q: Although CNNs are designed for image analysis tasks, they require large amounts of labeled data. How can businesses acquire this data in a cost-effective and ethical way? Is there a risk of bias if the training data isn't representative of real-world situations?

Opsie: Acquiring labeled data for CNNs is indeed challenging. Strategies could involve data augmentation, semi-supervised learning, or synthetic data generation. Ensuring the training data is representative of real-world scenarios is vital to prevent bias. Regular audits, using diverse data sources, and implementing fairness metrics can help tackle this issue.

Opsie: Thank you dearly for this meeting, everyone. It's clear to me that you still have a lot to learn (see invoice attached, though).

So What Is The Differences Between LLMs And Other Algos?

LLMs are specialized in natural language processing and excel in tasks like sentiment analysis, text classification, machine translation, question-answering systems, text summarization, content generation, conversational AI, and knowledge extraction. They are not designed for handling structured data, clustering, image analysis, or ranking structured data tasks, where other machine learning models and algorithms like linear regression, logistic regression, gradient boosting machines (GBMs) like XGBoost, k-nearest neighbors (KNN) algorithm, and convolutional neural networks (CNNs) would be more appropriate. The choice of model depends on the nature of the data and the task at hand, and it is important to select the appropriate model to achieve the desired outcome.

Start Your Project Today

If this work is of interest to you, then we’d love to talk to you. Please get in touch with our experts and we can chat about how we can help you.

.png)